HDF at SciPy2015

John Readey, The HDF Group

| Editor’s Note: Since this post was written in 2015, The HDF Group has developed HDF Cloud, a new product that addresses the challenges of adapting large scale array-based computing to the cloud and object storage while intelligently handling the full data management life cycle. If this is something that interests you, we’d love to hear from you. |

Interestingly enough, in addition to being known as the place to go for BBQ and live music, Austin, Texas is a major hub of Python development. Each year, Austin is host to the annual confab of Python developers known as the SciPy Conference. Enthought, a local Python-based company, was the major sponsor of the conference and did a great job of organizing the event. By the way, Enthought is active in Python-based training, and I thought the tutorial sessions I attended were very well done. If you would like to get some expert training on various aspects of Python, check out their offerings.

As a first-time conference attendee, I found attending the talks and tutorials very informative and entertaining. The conference’s focus is the set of packages that form the core of the SciPy ecosystem (SciPy, iPython, NumPy, Pandas, Matplotlib, and SymPy) and the ever-increasing number of specialized packages around this core.

- SciPy – is a core set of numerical analysis routines

- NumPy – provides support for large multi-dimensional arrays

- Pandas – provides support for tabular data (think SQL table or spreadsheet)

- Matplotlib – is a library for 2D plotting

- iPython – provides a Matlab-style notebook for Python

- SymPy – is a library for symbolic mathematics

Most of these packages internally use compiled C or Fortran code, but the Python interface makes these libraries much easier to work with. By leveraging compiled code, using these packages enables scripts to run much faster (in some cases thousands of times faster) compared to a pure Python equivalent.

From the HDF perspective, the two most important packages in this list are NumPy and Pandas. NumPy is a package for multi-dimensional array operations. The package, h5py, maintained by Andrew Collette, serves as the bridge from the HDF5 file format and NumPy array objects. While HDF5 is not typically the main subject of SciPy talks, it is frequently mentioned as the way to load or store the data of interest. So for better or worse, HDF gets treated as an important part of the plumbing. Andrew’s talk, “HDF5 is Eating the World,” was well received and was a good review of what HDF5 is and upcoming plans for the technology. Andrew included a call out to HDF’s Compass viewer app and used it to illustrate his demo.

H5py is to NumPy as PyTables is to Pandas (if that makes sense). Pandas’ focus is columnar data – in the HDF5 context this would be one-dimensional datasets with compound types. PyTables supports reading and writing this type of data in HDF5 (either by hyper slab-style selections or reading/writing rows based on “where” expressions).

On the subject of Pandas, the keynote talk, “My Data Journey with Python” by Wes McKinney (the original developer of Pandas) is quite entertaining. Pandas is rising in importance (I think a couple of years ago it wouldn’t have been considered as a core component of the SciPy stack), and is well worth following.

Related to Pandas is a new package called xray, that is attracting a lot of attention. You can check out the talk, “xray: N D Labeled Arrays and Datasets.” Think of xray as a sort of multi-dimensional Pandas. Xray uses NetCDF4 (hence HDF5) for persistent storage.

Anthony Scopatz (the PyTables maintainer), Andrew Collette, this writer, and other interested parties met during the conference to discuss the future of PyTables. One challenge with the Open Source development model popular in the SciPy community is that it is easier to get support and contributions for new projects, compared with older projects. Older projects may be quite important and widely used, but they can have a hard time finding support for even regular maintenance activities. The HDF Group may be able to help in this effort.

Speaking of Andy Scopatz, he and Kathryn Huff have just finished writing a book on Python for the non-professional developer: Effective Computation in Physics. I skimmed through it on the plane ride back from the conference. I would recommend it for anyone involved in science or engineering who would like an introduction to Python with a focus on getting real work done. Despite the title, there’s nothing covered that requires a physics background in order to get value from the book.

One confusing aspect for Python developers interested in HDF5 is that there are two Python packages for HDF5 (roughly equal in popularity): PyTables and h5py. While they both have their own focus, there is also a lot of overlap. At the PyTables meeting we discussed modifying PyTables so that it would be layered on top of h5py. This would make it possible to get rid of much redundant HDF5 lib wrapping code.

Matplotlib is the main plotting package for Python (it is what is used by Compass for plots). I’d describe Matplotlib as being on the mature side, and the most interesting developments in the graphics area are in web-based systems, such as Continuum’s Bokeh. Watch the Bokeh presentation for some nice examples of plots with Bokeh.

Another important package is iPython, which provides something like a Matlab environment for Python programming. Most tutorials and talks consist of at least some content in the form of an iPython web-based notebook. From a cloud computing perspective, an iPython notebook makes it easy to run interactive sessions remotely (i.e. the kernel runs on a remote system, but user interaction happens through a browser interface). iPython is expanding beyond the realm of Python (under the name Jupyter) to become a language-neutral system supporting R, Ruby, Julia and other languages in addition to Python. Project Jupyter received $6 million in funding last week to continue its development. By the way, Github now supports native rendering of iPython notebooks. For example, this is one of the notebooks I used in my talk.

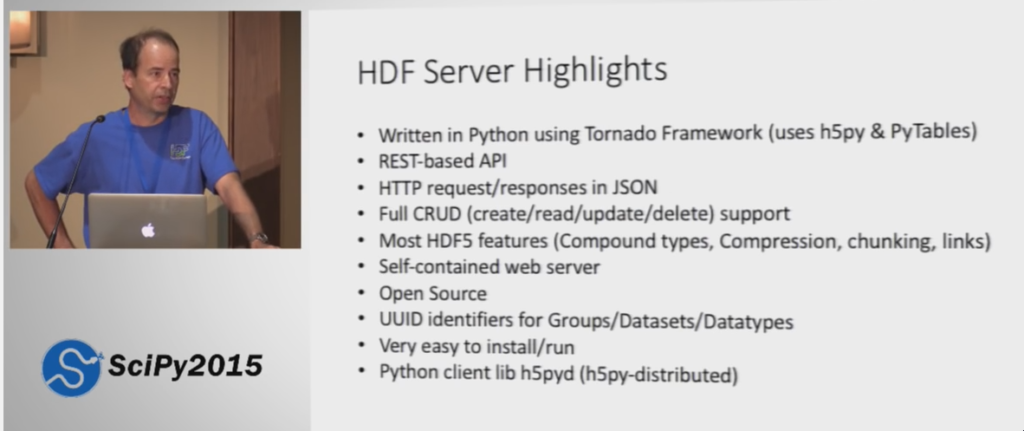

My talk on HDF Server was, I believe, well received. The slides are here, and you can watch the talk itself.

I received comments that many teams had had a need at one time or another for some form of distributed HDF, and that they are glad to see The HDF Group providing this. In my talk I also demo’ed a new library I’d just been working on (I was tweaking it even during the conference), h5pyd. The ‘d’ is for distributed h5py. The idea is that you can use a package that is 95% source code compatible with h5py, yet invokes calls over the REST API rather than using HDF5 lib. You can’t do anything with h5pyd that you couldn’t with h5py, but using it is much more convenient than using the REST API directly.

It was great getting feedback on HDF Server (h5serv) at SciPy (and likewise at the ESIP conference the week after), and I’m looking forward to incorporating some of the suggestions I’ve received in future updates of HDF Server.

Finally, some common questions/concerns about HDF that came up at the conference…

• Concurrent readers/writers – this is a common request from attendees. Andrew gave a good overview of SWMR in his talk (part of the upcoming HDF5 Release 1.10.0) and urged people to try it out if their task fits the SWMR use case.

• Journaling support for HDF5 – this is a feature that reflects a concern that an HDF5 file could get left in a corrupted, un-recoverable state if the application updating the file suddenly crashed. This is not planned feature for the upcoming 1.10 release, but will be considered for future releases.

• HDF5 backups – Large HDF5 files which get updated continually can be problematic from the backup point of view. (My take would be to address through the use of an object store – more on this in a future blog post!)

• Compression Filters – custom compression filters are a nice feature, but can be quite problematic to deal with in practice. We’ve been working with the Europe-based RDA Group to improve this. See: HDF5 External Filter Plugin Working Group.

As always, it was good to get feedback on what people liked about HDF5 and what features they would most like to see in future versions. For more information on upcoming 1.10 features, see our blog post, “What’s Coming in the HDF5 1.10.0 Release?”

The SciPy agenda also left some room for fun side activities. Here’s a pic of the riverboat cruise on Thursday evening:

Overall, the SciPy Conference is well worth attending for anyone, from novice to expert, who is interested in scientific programming with Python.

Overall, the SciPy Conference is well worth attending for anyone, from novice to expert, who is interested in scientific programming with Python.

See you next year in Austin for SciPy 2016!