John Readey, The HDF Group

| Editor’s Note: Since this post was written in 2015, The HDF Group has developed the Highly Scalable Data Service (HSDS) which addresses the challenges of adapting large scale array-based computing to the cloud and object storage while intelligently handling the full data management life cycle. Learn more about HSDS and The HDF Group’s related services. |

HDF Server is a new product from The HDF Group which enables HDF5 resources to be accessed and modified using Hypertext Transfer Protocol (HTTP).

HDF Server [1], released in February 2015, was first developed as a proof of concept that enabled remote access to HDF5 content using a RESTful API. HDF Server version 0.1.0 wasn’t yet intended for use in a production environment since it didn’t initially provide a set of security features and controls. Following its successful debut, The HDF Group incorporated additional planned features. The newest version of HDF Server provides exciting capabilities for accessing HDF5 data in an easy and secure way.

Providing a Secure System on a Network

As anyone who follows the news can attest, computer security on the Internet is a tricky subject. To maintain the integrity of data, it is mandatory that access is granted to only those clients with the proper credentials and privileges to invoke a request of a trusted server and network – especially requests that involve modifying or deleting data. To provide a secure system, there are three fundamental requirements that need to be negotiated between the HDF Server and a client – Authentication, Encryption and Authorization.

- Authentication – Is the requestor who he says he is?

- Encryption – Is the connection between the requestor and the service unreadable by any entity other than the server and the client?

- Authorization – How does the server determine if the authenticated user is permitted to perform the requested action?

This article will explore how these requirements have been implemented in HDF Server so as to store and deliver HDF5 data securely.

Authentication

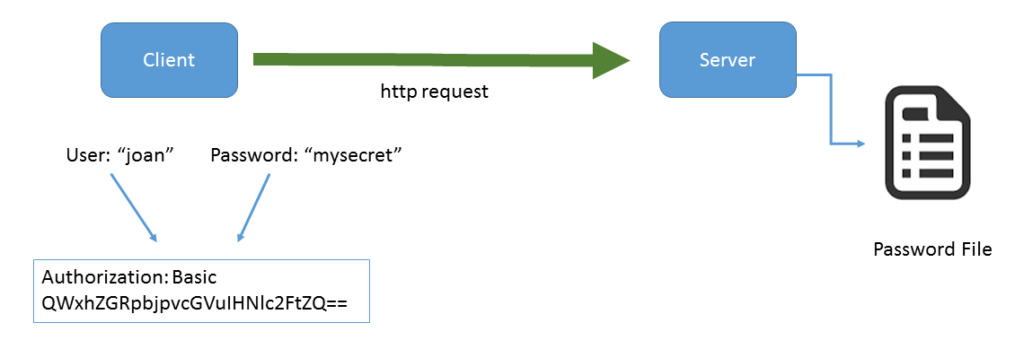

Various protocols (e.g. OAuth and OpenID) have been developed over the years to provide authentication services over HTTP. One of the oldest and simplest is basic access authentication. HDF Server now supports basic authentication [2] out of the box, and it is easy to plug in alternative authentication frameworks as desired.

[Note: in basic access authentication the transmitted credentials are not encrypted, only Base64-encoded, which is trivially reversible. Thus, connections to HDF Server should only be made over an HTTPS session.]
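To make the note above concrete, here is a minimal Python sketch of how basic authentication credentials are encoded, and how easily anyone who sees the header can decode them. The account name and password are made up for illustration, and the helper names are hypothetical; only the standard library's base64 module is used.

```python
import base64


def encode_basic_credentials(username, password):
    """Build the value of an HTTP Authorization header for basic auth."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def decode_basic_credentials(header_value):
    """Recover (username, password); note that no key or secret is needed."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a basic authentication header")
    decoded = base64.b64decode(token).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


# Hypothetical account, for illustration only.
header = encode_basic_credentials("joe", "secret")
print(header)
print(decode_basic_credentials(header))
```

Because decoding requires nothing but the header itself, the only protection for the password in transit is the encrypted connection.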

Let’s walk through an example of how basic authentication works with HDF Server.

1. A web client makes an unauthenticated request of the server (e.g. GET /).

2. If the addressed resource requires authentication (see below for more on how that is determined), the server responds with HTTP Status 401 – Authorization Required.

3. The client encodes a user name and password, includes it in the HTTP authorization header, and re-submits the request.

4. The server compares the user name and password against its password list.

5. If the user name and password are valid and the given user is allowed to access the requested resource, the server returns an HTTP Status 200 – OK response. Otherwise, the server returns either HTTP Status 401 – Authorization Required (if the user name or password is not valid) or HTTP Status 403 – Forbidden (if the credentials are valid, but the requesting user is not authorized to access the resource).

Of course, the client is always free to include the user name and password in its initial request to avoid having to repeat the request.
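The decision made in steps 1 through 5 can be sketched in a few lines of Python. This is an illustration of the flow described above, not HDF Server's actual implementation: the password table, the set of allowed users, and the function names are all hypothetical, and a real server would store password hashes rather than plain text.

```python
import base64

# Hypothetical stand-ins for the server's password list and for the users
# allowed to access one particular resource. Plain-text passwords are used
# only to keep the sketch short.
PASSWORDS = {"joe": "joes-password", "ann": "anns-password"}
ALLOWED_USERS = {"ann"}


def basic_header(username, password):
    """Client side (step 3): encode credentials for the Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def respond(authorization_header=None):
    """Server side: return the HTTP status code for a protected resource."""
    if authorization_header is None:
        return 401  # steps 1-2: unauthenticated request
    scheme, _, token = authorization_header.partition(" ")
    if scheme != "Basic":
        return 401
    try:
        decoded = base64.b64decode(token, validate=True).decode("utf-8")
    except ValueError:  # malformed Base64 or invalid UTF-8
        return 401
    username, _, password = decoded.partition(":")
    if PASSWORDS.get(username) != password:
        return 401  # step 4 failed: unknown user or wrong password
    if username not in ALLOWED_USERS:
        return 403  # valid credentials, but not authorized
    return 200  # step 5: request granted


print(respond())
print(respond(basic_header("joe", "joes-password")))
print(respond(basic_header("ann", "anns-password")))
```

Sending the header on the first request, as suggested above, simply skips the initial 401 round trip.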

How do the user name and password get into the server’s password file in the first place? This necessarily has to happen via a medium outside the REST API. HDF Server provides tools that can be run on the server host to create accounts and update passwords. (For more information, see the documentation links at the end of this blog post.)

Encryption

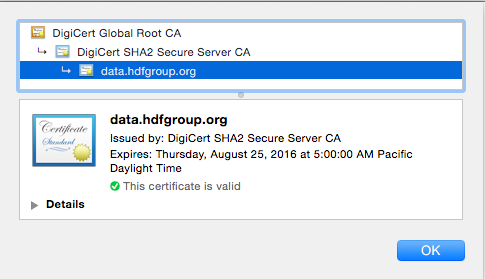

We’ve all seen some form of a padlock icon next to the URL in our favorite browsers, which indicates the security status of the session on any page that exchanges sensitive information (e.g. banking app, email). A regular HTTP connection communicates “in the clear,” so anyone who can intercept traffic between your browser and the server could potentially eavesdrop on the HTTP requests being sent to the server, watching for requests with login information. This could enable the dreaded “man in the middle” attack, which would undermine any other active security measures. The attacker can snoop passwords from the request stream and effectively log in as the authenticated user with that user’s access privileges.

The solution is to provide an encrypted connection known as HTTPS, or HTTP over SSL/TLS. This ensures that only the client and the intended server endpoint can encrypt and decrypt the communications between them. For example, if you go to the public instance of HDF Server we’ve set up at https://data.hdfgroup.org:7258, you’ll note the “HTTPS” rather than “HTTP” that indicates the browser is communicating over a secure connection. Also, your browser will display the padlock icon; clicking on it should enable you to display security session information as well as the certificate that has been set up for the server endpoint. This lets you know with a high level of certainty that the organization running the server is really who they say they are. The certificate issued by DigiCert, as displayed in Chrome for the link above, should assure you the service is provided by The HDF Group and not by a secret cyber hacking cartel!
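On the client side, a script can enforce the same rule a careful user applies by looking for the padlock: never attach credentials to a plain HTTP URL. The following is a minimal sketch using only Python's standard library; `require_https` is a hypothetical helper name, not part of HDF Server or any client library.

```python
import ssl
from urllib.parse import urlsplit


def require_https(url):
    """Return the URL unchanged, or raise if it would send data in the clear."""
    if urlsplit(url).scheme != "https":
        raise ValueError(f"refusing to send credentials to {url!r}")
    return url


# The standard library's default client context verifies the server's
# certificate chain and checks that the certificate matches the host name.
context = ssl.create_default_context()
print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.check_hostname)

# The public instance mentioned above passes the scheme check.
print(require_https("https://data.hdfgroup.org:7258"))
```

The context can then be passed to an HTTPS client (for example, via the `context` argument of `urllib.request.urlopen`) so that a connection to an endpoint with an invalid certificate fails rather than silently proceeding.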

Authorization

Once the server has authenticated that the user is who he or she claims to be, the next step is to determine if the request should actually be granted. For example, just because “Joe” has a valid password, and has sent a request over HTTPS, it doesn’t necessarily mean that the server should honor any DELETE requests he may send.

This is where authorization comes in. With normal HDF5 files on a shared file system, this is typically handled by login controls, file ownership, and permission flags. For a web-based service, however, the requesting user normally won’t have an account on the server machine, so that model doesn’t really fit.

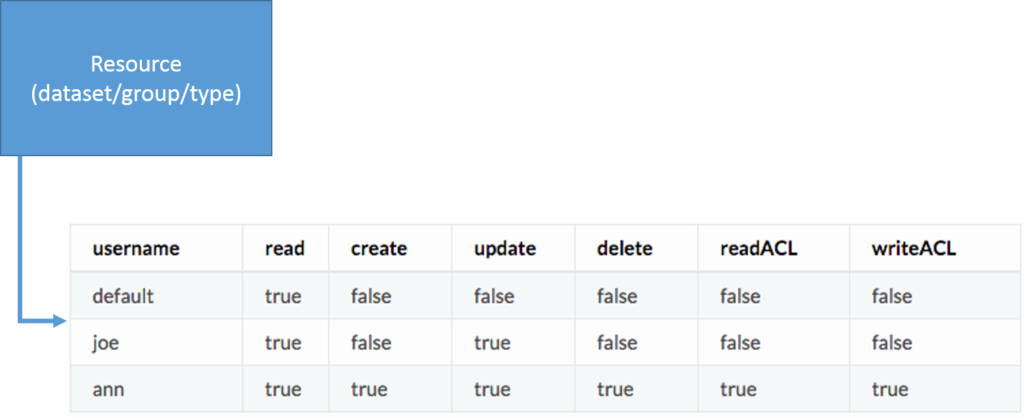

Instead, HDF Server uses an ACL (Access Control List) where each HDF5 resource (a dataset, group, or committed data type) has an associated list which contains a set of user identifiers and flags that indicate which specific actions the requesting user is allowed to perform.

Let’s look at an example of how this works:

The resource can be any dataset, group, or committed data type. In this example, there are three users in the associated ACL: “default”, “Joe”, and “Ann.” User “Joe” can read and update the resource (such as using PUT to update dataset values), but is not allowed to perform other actions (such as DELETE the resource). For example, any DELETE request on this resource from “Joe” would fail with an HTTP Status 403 – Forbidden. By contrast, user “Ann” can perform any action on the resource, including updating the ACL itself.

If a third party enters the picture, say, user “Bob,” and attempts to do a GET, he won’t be found in the list of users. In this case, the special user “default” is used to stand in for “Bob” or any users who aren’t otherwise listed in the ACL. Here the default permission for read is true, so the request is granted. The “default” permissions are also used to respond to any unauthenticated requests. For example, if the default read permission is false, any potential reader would need to send an authenticated request to do a GET on the resource (and then only if he were listed in the ACL with the read field set to true).
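The lookup described above, including the fallback to “default,” can be sketched as a small Python function. The ACL below mirrors the example with “default,” “Joe,” and “Ann”; the field names and the specific default values other than read are assumptions made for illustration, and `is_permitted` is a hypothetical helper, not HDF Server's code.

```python
# Illustrative ACL for one resource. Field names are assumptions chosen to
# match the actions discussed in the text.
ACL = {
    "default": {"read": True, "update": False, "delete": False},
    "Joe": {"read": True, "update": True, "delete": False},
    "Ann": {"read": True, "update": True, "delete": True},
}


def is_permitted(acl, action, username=None):
    """Check an action for a user, falling back to the "default" entry.

    A username of None stands for an unauthenticated request.
    """
    entry = acl.get(username, acl["default"])
    return entry.get(action, False)


print(is_permitted(ACL, "update", "Joe"))  # Joe may update
print(is_permitted(ACL, "delete", "Joe"))  # but not delete
print(is_permitted(ACL, "read", "Bob"))    # Bob is not listed: "default" applies
print(is_permitted(ACL, "read"))           # unauthenticated: "default" applies
```

A False result for an authenticated user corresponds to the 403 – Forbidden response described earlier.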

This system enables very fine-grained control over access for content within an HDF5 file. For example, you can have most content be readable by anyone, but only editable by a few “power users.” Or you could have some content of a file that is not readable by anyone who is not listed in the ACL, while the rest of the content is publicly readable.

However, setting up an ACL for each object within an HDF5 file is a bit tedious and hard to manage. What if “Ann” is no longer to be trusted and her permission grants need to be revoked? It would then be necessary to update the ACL of each resource in the file to remove her permissions. For situations where explicit ACLs for each resource are not needed, an ACL can be associated with just the root group. Whenever any resource is accessed in the file, if an ACL is not present for the given resource, the root group’s ACL will be used to determine if the request should be granted.
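The root-group fallback can be sketched the same way. In this hypothetical Python example, the resource identifiers (“root,” “dset1,” “dset2”) and the in-memory dictionary are placeholders for illustration; the point is only that revoking a user in the root group's ACL affects every resource that has no ACL of its own.

```python
# Hypothetical mapping from resource id to ACL. Only the root group and one
# dataset carry an explicit ACL; "dset2" has none.
ACLS_BY_RESOURCE = {
    "root": {
        "default": {"read": True, "update": False},
        "Ann": {"read": True, "update": True},
    },
    "dset1": {
        "default": {"read": False, "update": False},
        "Ann": {"read": True, "update": True},
    },
}


def effective_acl(acls_by_resource, resource_id, root_id="root"):
    """Return the resource's own ACL if present, else the root group's."""
    own = acls_by_resource.get(resource_id)
    return own if own is not None else acls_by_resource[root_id]


def may_update(acls_by_resource, resource_id, username):
    acl = effective_acl(acls_by_resource, resource_id)
    return acl.get(username, acl["default"]).get("update", False)


print(may_update(ACLS_BY_RESOURCE, "dset2", "Ann"))  # inherited from root
del ACLS_BY_RESOURCE["root"]["Ann"]                  # revoke Ann at the root
print(may_update(ACLS_BY_RESOURCE, "dset2", "Ann"))  # now falls to "default"
print(may_update(ACLS_BY_RESOURCE, "dset1", "Ann"))  # explicit ACL is unaffected
```

As the last line shows, resources with their own explicit ACL still need to be updated individually.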

How are ACLs created and modified? The REST API has been extended to provide an ACL resource that can be used with HTTP GET and PUT:

The request:

GET /{groups|datasets|datatypes}/<uuid>/acls

This returns the ACL for the given object as a JSON string.

To get the ACL for a particular user, add the username to the end of the request:

GET /{groups|datasets|datatypes}/<uuid>/acls/<username>

To update an ACL, use PUT:

PUT /{groups|datasets|datatypes}/<uuid>/acls/<username>

Above, the body of the request contains the ACL fields to update for that user. The readACL and writeACL fields enable or disable the ability of a given user to read and modify the ACL itself. So if the writeACL field for user “Ann” is true, “Ann” can use the PUT operation to set the writeACL field to false for herself. But then, if she sent a new request to revert the field to true, it would be denied because she no longer has permissions to do so. Be careful not to get caught in a Catch-22!
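The Catch-22 is easy to reproduce in a small simulation. The Python sketch below models only the rule stated above (a PUT on an ACL is honored only if the requester's writeACL field is true); it does not call HDF Server, and the function names, the in-memory ACL, and the shape of the update are assumptions made for illustration. The `acl_path` helper just assembles the request paths shown earlier.

```python
def acl_path(collection, uuid, username=None):
    """Build the ACL request path for a group, dataset, or datatype."""
    if collection not in ("groups", "datasets", "datatypes"):
        raise ValueError(f"unknown collection: {collection!r}")
    path = f"/{collection}/{uuid}/acls"
    return f"{path}/{username}" if username else path


def put_acl(acl, requester, target, fields):
    """Simulate PUT .../acls/<target>; return an HTTP status code."""
    requester_entry = acl.get(requester, acl["default"])
    if not requester_entry.get("writeACL", False):
        return 403  # requester may not modify the ACL
    acl.setdefault(target, {}).update(fields)
    return 200


# Hypothetical starting state: only Ann may modify the ACL.
acl = {
    "default": {"read": True, "writeACL": False},
    "Ann": {"read": True, "writeACL": True},
}

print(acl_path("datasets", "<uuid>", "Ann"))
print(put_acl(acl, "Ann", "Ann", {"writeACL": False}))  # Ann locks herself out
print(put_acl(acl, "Ann", "Ann", {"writeACL": True}))   # and cannot undo it
```

Once the second request is refused, nothing sent through the REST API by “Ann” can restore her permission.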

See the HDF Server documentation for all the details for using the new API operations.

The new release of HDF Server also includes some command line utilities for listing and updating ACLs: getacl.py and setacl.py. These tools don’t go through the REST API, but operate directly on the file hosted by the server. So, by using these tools the sys admin folks can rescue “Ann” from the Catch-22 scenario above. Making sure that these tools are not used in an unauthorized way requires proper system security for the server itself (e.g. by making sure that only authorized users have sudo privileges on the host running the server).

See the administrative tools documentation for a complete description of how to use the new tools.

Conclusion

This has been a brief tour of some exciting new features for HDF Server. Our hope is that these features will enable the use of HDF Server (and HDF5) in ways that may not have been possible before.

If you would like to try it out yourself, you can easily set up HDF Server on your own computer by following the instructions.

Then create a password file and user accounts using the Admin tools. This test case illustrates how to use authentication and authorization with HDF Server: https://github.com/HDFGroup/h5serv/blob/master/test/integ/acltest.py

As always, we welcome any questions or comments you might have, below!

1 – HDF5 for the Web – HDF Server

2 – https://en.wikipedia.org/wiki/Basic_access_authentication