Webinar Followup: Parallel I/O with HDF5 and Performance Tuning Techniques

On June 26, 2020, The HDF Group employee, Scot Breitenfeld presented a webinar called "Parallel I/O with HDF5 and Performance Tuning Techniques." ...

On June 26, 2020, The HDF Group employee, Scot Breitenfeld presented a webinar called "Parallel I/O with HDF5 and Performance Tuning Techniques." ...

The HDF Group (HDF®), the maintainers and creators of the open source HDF5 library and file format, has joined the COVID-19 High Performance Computing (HPC) Consortium as an affiliate to provide expertise that can enhance and accelerate COVID-19 research....

A slide deck and recording for the June 5, 2020 webinar, "An Introduction to HDF5 in HPC Environments."...

Damaris is a middleware that enriches existing HPC data format libraries (e.g. HDF5) with data aggregation and asynchronous data management capabilities. At the same time, it can be employed for in situ analysis and visualization purposes. ...

The HDF Group’s HDF Server has been nominated for Best Use of HPC in the Cloud, and Best HPC Software Product or Technology in HPCWire’s 2016 Readers’ Choice Awards. HDF Server is a Python-based web service that enables full read/write web access to HDF data – it can be used to send and receive HDF5 data using an HTTP-based REST interface. While HDF5 provides powerful scalability and speed for complex datasets of all sizes, many HDF5 data sets used in HPC environments are extremely large and cannot easily be downloaded or moved across the internet to access data on an as-needed basis. Users often only need to access a small subset of the data. Using HDF Server, data can be kept in one...

Pearah joins The HDF Group as new Chief Executive Officer

Champaign, IL — The HDF Group today announced that its Board of Directors has appointed David Pearah as its new Chief Executive Officer. The HDF Group is a software company dedicated to creating high performance computing technology to address many of today’s Big Data challenges.

Pearah replaces Mike Folk upon his retirement after ten years as company President and Board Chair. Folk will remain a member of the Board of Directors, and Pearah will become the company’s Chairman of the Board of Directors.

Pearah said, “I am honored to have been selected as The HDF Group’s next CEO. It is a privilege to be part of an organization with a nearly 30-year history of delivering innovative technology to meet the Big Data demands of commercial industry, scientific research and governmental clients.”

Industry leaders in fields from aerospace and biomedicine to finance join the company’s client list. In addition, government entities such as the Department of Energy and NASA, numerous research facilities, and scientists in disciplines from climate study to astrophysics depend on HDF technologies.

Pearah continued, “We are an organization led by a mission to make a positive impact on everyone we engage, whether they are individuals using our open-source software, or organizations who rely on our talented team of scientists and engineers as trusted partners. I will do my best to serve the HDF community by enabling our team to fulfill their passion to make a difference. We’ve just delivered a major release of HDF5 with many additional powerful features, and we’re very excited about several innovative new products that we’ll soon be making available to our user community.”

“Dave is clearly the leader for HDF’s future, and

We are excited and pleased to announce HDF5-1.10.0, the most powerful version of our flagship software ever.> This major new release of HDF5 is more powerful than ever before and packed with new capabilities that address important data challenges faced by our user community. HDF5 1.10.0 contains many important new features and changes, including those listed below. The features marked with * use new extensions to the HDF5 file format. The Single-Writer / Multiple-Reader or SWMR feature enables users to read data while concurrently writing it. * The virtual dataset (VDS) feature enables users to access data in a collection of HDF5 files as a single HDF5 dataset and to use the HDF5 APIs to work with that dataset. * (NOTE:...

HDF5 began out of a collaboration between the National Center for Supercomputing Applications (NCSA) and the US Department of Energy’s Advanced Simulation and Computing Program (ASC), so high-performance computing (HPC) I/O has been in our focus from the very beginning. As we are starting our 20th year of development on HDF5, HPC I/O continues to be a critical driver of new features.

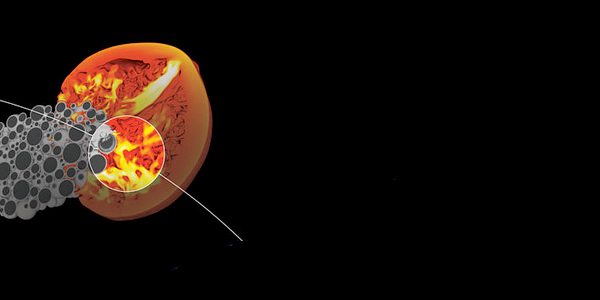

Los Alamos National Laboratory is home to two of the world’s most powerful supercomputers, each capable of performing more than 1,000 trillion operations per second. Here, ASC is examining the effects of a one-megaton nuclear energy source detonated on the surface of an asteroid. Image from ASC at http://www.lanl.gov/asci/

The HDF5 development team has focused on three things when serving the HPC community: performance, freedom of choice and ease of use.

The current improvement of using collective I/O to reduce the number of independent processes accessing the file system helped to improve the metadata reads for cgp_open substantially, yielding 100-1000 times faster execution times over the previous implementation....

What costs applications a lot of time and resources rather than doing actual computation? Slow I/O. It is well known that I/O subsystems are very slow compared to other parts of a computing system. Applications use I/O to store simulation output for future use by analysis applications, to checkpoint application memory to guard against system failure, to exercise out-of-core techniques for data that does not fit in a processor’s memory, and so on. I/O middleware libraries, such as HDF5, provide application users with a rich interface for I/O access to organize their data and store it efficiently. A lot of effort is invested by such I/O libraries to reduce or completely hide the cost of I/O from applications.

Blue Waters supercomputer at the National Center for Supercomputing Applications, University of Illinois, Urbana-Champaign campus. Blue Waters is supported by the National Science Foundation and the University of Illinois.

First, to leverage parallel I/O, it is very important that you have a parallel file system;